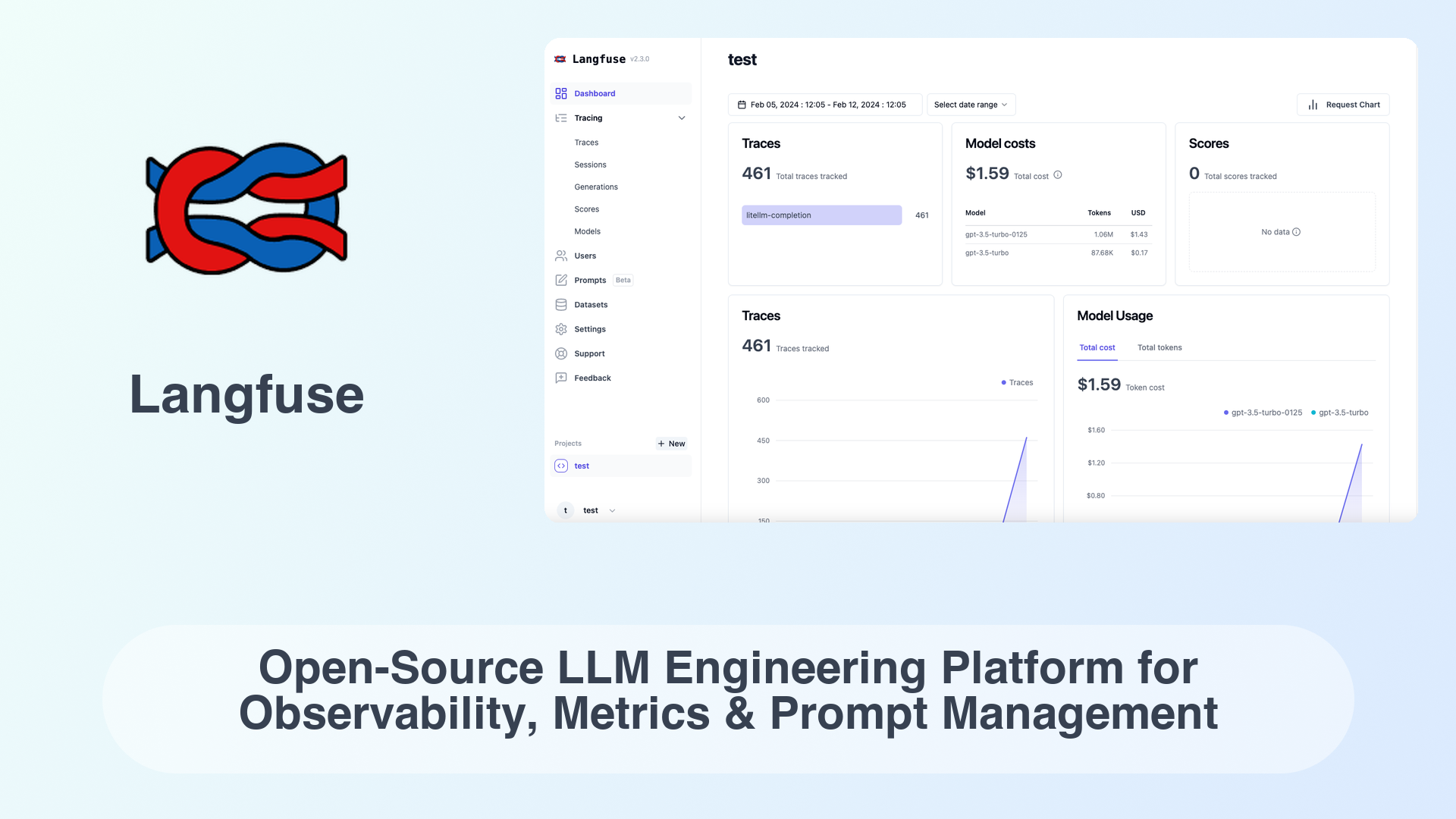

In the rapidly evolving world of AI and machine learning, managing large language models (LLMs) can be a daunting task. From ensuring observability to managing prompts and tracking metrics, the complexity can quickly become overwhelming. Enter Langfuse, an open-source LLM engineering platform designed to simplify these challenges. In this blog post, we’ll dive deep into what makes Langfuse a standout choice for developers and businesses, and how it compares to other tools in the market.

What is Langfuse?

Langfuse is an open-source platform tailored for LLM engineering, focusing on three core areas:

- Observability: Gain deep insights into your LLM’s performance and behavior.

- Metrics: Track key performance indicators (KPIs) to ensure your models are meeting expectations.

- Prompt Management: Efficiently manage and optimize prompts to improve model outputs.

Langfuse is designed to be developer-friendly. It offers Python and JavaScript/TypeScript SDKs plus ready-made integrations for popular frameworks such as LangChain and LlamaIndex, and its feature set covers both small-scale projects and enterprise-level deployments.

Key Features of Langfuse

- Open-Source: Langfuse's core is MIT-licensed and can be self-hosted, giving you full transparency into the code and the freedom to customize it.

- Real-Time Observability: Monitor your LLM application in real time, with detailed traces of every request.

- Comprehensive Metrics: Track latency, token usage, cost, and quality scores (for example, user feedback or evaluation results that measure accuracy).

- Prompt Versioning: Easily manage different versions of prompts and compare their performance.

- Collaboration Tools: Facilitate team collaboration with shared dashboards and role-based access control.

- Scalability: Designed to scale with your needs, from small projects to large enterprise deployments.
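Metrics and prompt versioning work best together. Once every call is recorded, you can break the numbers down by prompt version and see whether a new version actually helped. The sketch below illustrates that idea in plain Python. It is not Langfuse's API or data model. The `Observation` record and the `summarize_by_version` helper are hypothetical, and the sample numbers are made up purely for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean, median
from typing import Dict, Iterable, List


@dataclass
class Observation:
    """One recorded LLM call (hypothetical record shape, not Langfuse's schema)."""
    prompt_version: int
    latency_ms: float
    cost_usd: float
    score: float  # e.g. a user-feedback or evaluation score between 0 and 1


def summarize_by_version(observations: Iterable[Observation]) -> Dict[int, dict]:
    """Group calls by prompt version and compute simple per-version metrics."""
    groups: Dict[int, List[Observation]] = defaultdict(list)
    for obs in observations:
        groups[obs.prompt_version].append(obs)

    summary = {}
    for version in sorted(groups):
        items = groups[version]
        summary[version] = {
            "calls": len(items),
            "median_latency_ms": median([o.latency_ms for o in items]),
            "total_cost_usd": sum(o.cost_usd for o in items),
            "mean_score": mean([o.score for o in items]),
        }
    return summary


# Illustrative sample data only, not real measurements.
sample = [
    Observation(1, 820.0, 0.0021, 0.5),
    Observation(1, 910.0, 0.0024, 0.75),
    Observation(2, 640.0, 0.0018, 0.75),
    Observation(2, 700.0, 0.0019, 1.0),
    Observation(2, 660.0, 0.0017, 0.875),
]
for version, stats in summarize_by_version(sample).items():
    print(version, stats)
```

The median resists a single slow outlier call better than the mean does. Total cost and mean score give a quick read on whether version 2 is cheaper and better rated than version 1.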

Why Choose Langfuse?

1. Open-Source Flexibility

Because Langfuse is open source, you can self-host it on your own infrastructure, inspect its code, and adapt it to your specific needs. Development happens in the open on GitHub, where the community can report issues and contribute improvements.

2. Comprehensive Observability

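Langfuse records each request to your application as a trace. A trace holds the LLM calls, retrieval steps, and other operations the request involved, nested in the order they ran, along with their inputs, outputs, latency, token usage, and cost. Inspecting these traces as they arrive helps you catch errors, slow steps, and unexpected outputs early.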
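The sketch below makes the idea of a trace concrete by showing what a tracing decorator records. Langfuse's Python SDK provides its own `@observe()` decorator for this purpose. The `Tracer` and `Span` classes here are hypothetical stand-ins written with the standard library only, not the SDK's implementation. The `retrieve` and `call_llm` functions fake the real work.

```python
import functools
import time
from dataclasses import dataclass, field
from typing import Any, Callable, List, Optional


@dataclass
class Span:
    """A hypothetical record of one traced step (not Langfuse's data model)."""
    name: str
    input: Any
    output: Any = None
    error: Optional[str] = None
    duration_ms: float = 0.0
    children: List["Span"] = field(default_factory=list)


class Tracer:
    """Minimal in-memory tracer that builds a tree of nested spans."""

    def __init__(self) -> None:
        self.roots: List[Span] = []   # one root span per top-level call (a "trace")
        self._stack: List[Span] = []  # spans currently open, innermost last

    def observe(self, func: Callable) -> Callable:
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            span = Span(name=func.__name__, input={"args": args, "kwargs": kwargs})
            # Attach to the currently open span, or start a new trace.
            if self._stack:
                self._stack[-1].children.append(span)
            else:
                self.roots.append(span)
            self._stack.append(span)
            start = time.perf_counter()
            try:
                span.output = func(*args, **kwargs)
                return span.output
            except Exception as exc:
                span.error = repr(exc)  # record the failure, then re-raise it
                raise
            finally:
                span.duration_ms = (time.perf_counter() - start) * 1000
                self._stack.pop()
        return wrapper


tracer = Tracer()


@tracer.observe
def retrieve(question: str) -> List[str]:
    return [f"doc about {question}"]  # stand-in for a retrieval step


@tracer.observe
def call_llm(prompt: str) -> str:
    return f"answer based on: {prompt}"  # stand-in for a real LLM call


@tracer.observe
def answer(question: str) -> str:
    docs = retrieve(question)
    return call_llm(f"{question} | {docs[0]}")
```

Calling `answer("What is Langfuse?")` produces one root span named `answer` with two children, `retrieve` and `call_llm`. Each span carries its own input, output, and duration. This sketch keeps the open spans in a plain list, so it only handles single-threaded code. Production tracers typically track the active span with context variables so that nesting also works across threads and async tasks.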

3. Advanced Prompt Management

Managing prompts can be a complex task, but Langfuse simplifies it with features like prompt versioning and performance comparison. This allows you to optimize your prompts for better model outputs.
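In Langfuse, each prompt is stored as a series of versions. Labels such as `production` point at a specific version, and templates use `{{variable}}` placeholders that are filled in at request time. The plain-Python sketch below models that workflow. `PromptRegistry` and `compile_prompt` are hypothetical names, not the Langfuse SDK, and the store lives only in memory.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Optional


@dataclass
class PromptVersion:
    version: int
    template: str
    labels: List[str] = field(default_factory=list)


class PromptRegistry:
    """Hypothetical in-memory prompt store with numbered versions and labels."""

    def __init__(self) -> None:
        self._prompts: Dict[str, List[PromptVersion]] = {}

    def create(self, name: str, template: str,
               labels: Optional[Iterable[str]] = None) -> PromptVersion:
        versions = self._prompts.setdefault(name, [])
        new = PromptVersion(version=len(versions) + 1, template=template,
                            labels=list(labels or []))
        # A label points at exactly one version, so move it off older versions.
        for old in versions:
            old.labels = [lbl for lbl in old.labels if lbl not in new.labels]
        versions.append(new)
        return new

    def get(self, name: str, label: str = "production") -> PromptVersion:
        for pv in self._prompts[name]:
            if label in pv.labels:
                return pv
        raise KeyError(f"no version of {name!r} has label {label!r}")

    def get_version(self, name: str, version: int) -> PromptVersion:
        return self._prompts[name][version - 1]


def compile_prompt(template: str, **variables: str) -> str:
    """Replace {{variable}} placeholders with the given values."""
    def substitute(match):
        key = match.group(1)
        if key not in variables:
            raise KeyError(f"missing variable: {key}")
        return str(variables[key])
    return re.sub(r"\{\{\s*(\w+)\s*\}\}", substitute, template)
```

Application code always asks for the `production` label. Promoting a new version, or rolling back to an old one, then means moving a label rather than redeploying code. Old versions stay available for side-by-side comparison.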

4. Scalability

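Whether you’re working on a small project or managing a large enterprise deployment, Langfuse is designed to scale with you. Its SDKs send trace data asynchronously in the background, so instrumentation adds little latency to your application. Self-hosted deployments can scale the web and worker services independently, with trace data stored in ClickHouse.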
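Instrumentation stays cheap under load partly because Langfuse's SDKs queue events and send them in the background, not on the request path. The sketch below shows that general batching pattern using Python's standard `queue` and `threading` modules. `BatchingExporter` and its `send_batch` callback are hypothetical, and they do not reproduce the SDK's code. In a real client, `send_batch` would be an HTTP call to an ingestion endpoint.

```python
import queue
import threading
from typing import Any, Callable, Dict, List

EventDict = Dict[str, Any]


class BatchingExporter:
    """Hypothetical background exporter that ships events in batches."""

    def __init__(self, send_batch: Callable[[List[EventDict]], None],
                 max_batch_size: int = 50, flush_interval_s: float = 1.0) -> None:
        self._send_batch = send_batch
        self._max_batch_size = max_batch_size
        self._flush_interval_s = flush_interval_s
        self._queue: "queue.Queue[EventDict]" = queue.Queue()
        self._stop = threading.Event()
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def enqueue(self, event: EventDict) -> None:
        # Called on the request path: a cheap in-memory put, no network I/O.
        self._queue.put(event)

    def _next_batch(self) -> List[EventDict]:
        batch: List[EventDict] = []
        try:
            # Block up to the flush interval for the first event...
            batch.append(self._queue.get(timeout=self._flush_interval_s))
            # ...then take whatever else is already waiting, up to the size limit.
            while len(batch) < self._max_batch_size:
                batch.append(self._queue.get_nowait())
        except queue.Empty:
            pass
        return batch

    def _run(self) -> None:
        # Background thread: keep sending batches until shutdown is requested.
        while not self._stop.is_set():
            batch = self._next_batch()
            if batch:
                self._send_batch(batch)

    def shutdown(self) -> None:
        """Stop the worker, then flush any events still queued."""
        self._stop.set()
        self._worker.join()
        remaining: List[EventDict] = []
        while True:
            try:
                remaining.append(self._queue.get_nowait())
            except queue.Empty:
                break
        for i in range(0, len(remaining), self._max_batch_size):
            self._send_batch(remaining[i:i + self._max_batch_size])
```

Because events leave the process asynchronously, short-lived scripts and serverless functions must flush before exiting, or the last events are lost. The Langfuse SDKs expose a `flush()` method for this purpose. The sketch also omits the retries and error handling a production exporter would need.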

Langfuse vs Competitors

To help you understand how Langfuse stacks up against other tools in the market, here’s a comparison table:

| Feature | Langfuse | Competitor A | Competitor B |
|---|---|---|---|
| Open-Source | Yes | No | Yes |
| Real-Time Observability | Yes | Yes | No |
| Prompt Management | Advanced | Basic | Moderate |
| Metrics Tracking | Comprehensive | Limited | Comprehensive |
| Scalability | High | Moderate | High |
| Community Support | Strong | Limited | Moderate |

Getting Started with Langfuse

Getting started with Langfuse is straightforward. Here’s a quick guide:

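- Installation: Sign up for Langfuse Cloud, or self-host by cloning the Langfuse repository from GitHub and starting it with Docker Compose, following the self-hosting instructions.

- Integration: Instrument your application with the Python or JavaScript/TypeScript SDK, or use one of the framework integrations (for example, LangChain or the OpenAI SDK wrapper).

- Configuration: Create a project, generate its API keys, and set them in your application's environment (LANGFUSE_PUBLIC_KEY, LANGFUSE_SECRET_KEY, and LANGFUSE_HOST) so traces and metrics start flowing.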

- Optimization: Use the prompt management tools to optimize your prompts and improve model outputs.
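The Langfuse SDKs read a project's credentials from the environment variables `LANGFUSE_PUBLIC_KEY`, `LANGFUSE_SECRET_KEY`, and `LANGFUSE_HOST`. A small preflight check at startup can catch a missing key before any traces are lost. `load_settings` and `LangfuseSettings` below are hypothetical helpers built on the standard library only. The fallback host is Langfuse Cloud's `https://cloud.langfuse.com` endpoint, and a self-hosted deployment would set its own URL.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional

DEFAULT_HOST = "https://cloud.langfuse.com"  # fallback when LANGFUSE_HOST is unset


@dataclass(frozen=True)
class LangfuseSettings:
    public_key: str
    secret_key: str
    host: str


def load_settings(env: Optional[Mapping[str, str]] = None) -> LangfuseSettings:
    """Read Langfuse credentials from the environment and fail fast if any are missing."""
    env = os.environ if env is None else env
    required = ("LANGFUSE_PUBLIC_KEY", "LANGFUSE_SECRET_KEY")
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError("missing environment variables: " + ", ".join(missing))
    return LangfuseSettings(
        public_key=env["LANGFUSE_PUBLIC_KEY"],
        secret_key=env["LANGFUSE_SECRET_KEY"],
        # Strip a trailing slash so URLs can be joined consistently later.
        host=env.get("LANGFUSE_HOST", DEFAULT_HOST).rstrip("/"),
    )
```

Passing a plain dictionary instead of `os.environ` keeps the check easy to test. Calling it once at startup turns a silent "no traces showing up" problem into an immediate, explicit error.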

Conclusion

Langfuse is a powerful open-source platform that addresses the key challenges of LLM engineering. With its focus on observability, metrics, and prompt management, it offers a comprehensive solution for developers and businesses alike. Whether you’re just starting out with LLMs or managing a large-scale deployment, Langfuse provides the tools you need to succeed.

Ready to take your LLM engineering to the next level? Explore Langfuse today and see how it can transform your AI projects.

Call to Action:

If you’re looking for a fully managed service to deploy and manage Langfuse or any other open-source software, visit OctaByte today. Let us handle the technical complexities so you can focus on what matters most—building amazing AI solutions.